The following is an article I had published in the Sept. 20 edition of U.S. 1 Magazine

Say Again? Voco Says Yes

by Melissa Drift

The video has garnered more than 1 million views on YouTube since it was uploaded in November 2016: Television personality and comedian Jordan Peele and Adobe’s community engagement manager Kim Chambers look on as Princeton graduate student Zeyu Jin presents a groundbreaking new software program that he is developing.

The presentation took place last year at the annual Adobe Max, a weeklong convention where the software company showcases its new products and features. Adobe is known for its digital editing programs, several of which are industry standards in fields such as broadcasting, movie production, and web design.

The new application is called VoCo, and it promises to revolutionize audio production the way Adobe’s iconic Photoshop program revolutionized photography. The user can type words that were not said in an original recording, and the program will generate a new recording of those words in the voice of the original speaker. In other words, VoCo can produce natural-sounding audio of people saying words and phrases that they never actually said.

Back to the 2016 video: The crowd laughs and cheers as Jin plays a recording of comedian Keegan-Michael Key from the Comedy Central show “Key & Peele.” Jin and the hosts cheerfully trade jokes as he demonstrates how quickly he can change the recording to make Key say that he “kissed Jordan three times,” words the comedian never actually spoke. At the end of the demonstration, Peele jokingly says to Jin, “You could get in big trouble for something like this!”

Naturally, the ability to change audio recordings in this way raises many questions. Jin and his faculty advisor, Princeton computer science professor Adam Finkelstein, are concerned about the potential for misuse and say they are working on several ways to detect whether their program has been used on a recording. “When we show people this project, many people immediately have that reaction, like ‘oh no, you could make somebody say something that they never said,’ and so we have to have this conversation,” Finkelstein says. “It’s not too shocking or surprising.”

Finkelstein says it was the same when photo editing programs like Adobe’s Photoshop came out. “People want to know, has this photo been edited, right? When Photoshop came out all of a sudden it was possible to do all kinds of crazy things.” He cites the public outcry over the February 1982 cover of National Geographic as an example: the magazine had used digital editing to move two of the Egyptian pyramids slightly closer together so that they would fit on the cover. “People have been asking these questions since the beginning of digital editing,” he says.

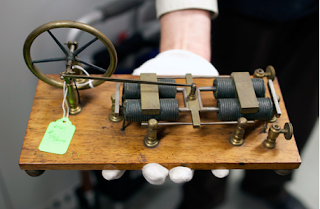

Speech synthesis and the ability to edit recordings are not new. It has been technologically possible to change recordings and even manufacture new words since the beginning of analog tape recording. Skilled radio and music producers would physically cut and reattach pieces of audio tape to rearrange or delete unwanted words or sounds in the recording. It was a laborious process, but it worked well enough.

The advent of digital technology, starting in the 1980s, changed everything. Broadcasters and amateurs alike now routinely use editing programs such as Adobe Audition, an industry standard, to fix recording mistakes, remove unwanted background sounds, and otherwise modify recordings. The shift was a creative boon for amateur artists: many things that were once possible only in a studio are now done quickly and cheaply by high school kids in their bedrooms.

Since YouTube launched in 2005, homemade movies and music have become mainstream. A notable example is Vocaloid, a Japanese music composition program that shares some similarities with the VoCo project. The program lets users make a series of stock synthesized voices sing whatever words and melodies they want. Each synthesized voice is represented as a different anime character, and the artificial voices are built from sample recordings of real Japanese singers. In the past decade, Vocaloid music has become its own distinct genre. Many composers write songs about creepy, horror-related subject matter, and the voices tend to sound childlike and robotic.

Addressing the difference between Vocaloid and VoCo, Jin says it’s easier to synthesize a singing voice than speech. “Vocaloid synthesizes a pretty robotic voice, if you listen to them. But people like it because it sounds a little bit less human and very interesting. But they can do a lot of stuff like singing all kinds of styles and syllables. For speech, it’s actually way harder to make it more human-like. When you are singing the pitch is pretty stable per note. And the stable pitch is very easy to synthesize with a computer. The computer can just make a flat pitch or a pitch with a little bit of fluctuation to make it a little bit more human like. But for speech, the pitch is everywhere. We’re still doing the research on how people choose pitch in saying things. This is still a pretty open question.”
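To make Jin’s point about stable pitch concrete, here is a minimal Python sketch, not taken from Vocaloid or VoCo, of how a program might generate the pitch of one sung note: a flat pitch, optionally with a small periodic fluctuation (vibrato), rendered as a plain sine wave. The function names, the 100-frames-per-second pitch contour, and the vibrato settings are illustrative assumptions. Speech offers no comparably simple recipe, because its pitch moves continuously from syllable to syllable.

```python
import math

def note_pitch_contour(f0, duration_s, frame_rate=100, vibrato_hz=0.0, depth=0.0):
    """Pitch in Hz for each frame of a sung note: a flat f0, optionally with vibrato.
    depth is the fractional deviation, e.g. 0.01 means plus or minus 1 percent."""
    frames = int(duration_s * frame_rate)
    return [f0 * (1 + depth * math.sin(2 * math.pi * vibrato_hz * i / frame_rate))
            for i in range(frames)]

def render(contour, frame_rate=100, sample_rate=16_000):
    """Turn a pitch contour into a sine-wave waveform by accumulating phase,
    so the pitch can change smoothly without clicks between frames."""
    samples, phase = [], 0.0
    hop = sample_rate // frame_rate  # audio samples per pitch frame
    for f in contour:
        for _ in range(hop):
            phase += 2 * math.pi * f / sample_rate
            samples.append(math.sin(phase))
    return samples

flat = note_pitch_contour(440.0, 1.0)                              # a perfectly steady A4
vib = note_pitch_contour(440.0, 1.0, vibrato_hz=5.5, depth=0.01)   # slightly "human" A4
wave = render(vib)
```

The flat contour is exactly what Jin calls easy; the vibrato version adds "a little bit of fluctuation" with one extra sine term.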

Currently, if you want to fix a mistake or add new words to a recording, you have two options: invite the speaker or voiceover talent back to redo the recording, or fix the mistake manually yourself. Either can be time-consuming and cost-prohibitive. To create a new word, you would have to hunt through the recording for all the bits and pieces of sound you need and then cut and paste them together, which is also slow and costly.

For that to work, all the sounds you need have to be present in the original recording. Jin says it takes around 30 hours of recordings for companies like Google and Apple to build their synthesized voices. Says Jin: “The problem [with those programs] is they only support one specific voice. You can’t make a Siri of your own voice.”

VoCo makes this process much faster and cheaper, and it can recreate any voice. The program needs only a 20- to 40-minute audio sample, because it works differently from other voice synthesis programs. The user first provides a written transcript of the recording. Unlike other programs, VoCo starts by generating a generic synthetic voice that matches the transcript. The program breaks the original recording down into small sound snippets called phonemes, the building blocks of words. The artificially generated voice is then used as a guide to find the right phonemes and assemble them into words. You type the words you want to change, and the program makes the change automatically, much like the find-and-replace feature in Microsoft Word.
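The guide-driven search described above resembles what speech researchers call unit selection. The following is a greatly simplified Python sketch under stated assumptions, not VoCo’s actual algorithm, which is considerably more elaborate: each phoneme of the synthetic guide is matched to the recorded snippet with the same phoneme label whose features are closest. The `Segment` type, the two toy features (pitch in Hz and duration in milliseconds, left unscaled for simplicity), and the clip names are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Segment:
    phoneme: str      # phoneme label, e.g. "K" or "IH"
    features: tuple   # hypothetical acoustic features: (pitch in Hz, duration in ms)
    source: str       # where the snippet came from

def select_units(guide, corpus):
    """For each phoneme in the synthetic guide, pick the recorded snippet with
    the same label whose features are nearest (Euclidean distance)."""
    chosen = []
    for target in guide:
        candidates = [s for s in corpus if s.phoneme == target.phoneme]
        if not candidates:
            # this sketch can only reuse sounds that exist in the recording
            raise ValueError(f"no recorded snippet for phoneme {target.phoneme!r}")
        chosen.append(min(candidates, key=lambda s: math.dist(s.features, target.features)))
    return chosen

# Toy corpus cut from the speaker's recording, and a guide for the word "kissed".
corpus = [
    Segment("K", (120, 80), "clip 3"), Segment("K", (180, 60), "clip 9"),
    Segment("IH", (125, 90), "clip 3"), Segment("S", (0, 110), "clip 5"),
    Segment("T", (0, 40), "clip 7"), Segment("T", (0, 70), "clip 2"),
]
guide = [Segment("K", (125, 75), "guide"), Segment("IH", (130, 85), "guide"),
         Segment("S", (0, 100), "guide"), Segment("T", (0, 45), "guide")]
picked = [s.source for s in select_units(guide, corpus)]  # snippets to splice, in order
```

Choosing each phoneme independently keeps the sketch short; a practical system would also weigh how smoothly neighboring snippets join, usually with a dynamic-programming search over whole sequences.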

Says Finkelstein: “It’s true that we’re making it easier to do this thing that could cause people to believe that somebody said something that they didn’t say. It is true that we’re moving toward making it easier to do that, and it’ll take time for people to adjust to that idea, just as it took time for people to adjust to the idea that you could move a pyramid in Photoshop.” Today, that idea is unremarkable.

Originally from Anhui, China, Jin, 28, has been in the United States for six years. He spent the first two in Pittsburgh, earning a master’s degree in machine learning at Carnegie Mellon University. He is now a fourth-year graduate student working toward a Ph.D. in computer science at Princeton University. Several music keyboards once used by the Princeton Laptop Orchestra are scattered across the desk in his office. Music and composition, he says, are among the many eclectic interests that led him to computer science and the VoCo project.

Jin’s mother is a government worker, and his father works for Chery Motors, a Chinese car company in which his father played a founding role. Jin started playing with computers at age three and started playing the piano at four; he also plays the flute. He learned computer programming in elementary school. At first, though, Jin says, he didn’t really like playing the piano. He likes music, but he found the traditional process of learning an instrument boring and repetitive, and he wanted to find a way to use computers to make it easier. That is what led him to this work. Jin also cites an uncle who holds a Ph.D. in computer science as a childhood influence.

At 17, Jin released several albums of Celtic music in China. His passion for the genre started when he discovered the Norwegian group Secret Garden as a kid, after the group had won the Eurovision Song Contest. “It’s very funny I listened to Secret Garden. That’s pretty classic Norwegian music and I was like ‘holy crap this is great music.’ So I kept listening to more and more and then I was thinking OK I’m going to write some music and that’s when I got started. So I was highly influenced by European music when I was in China.”

His albums weren’t especially popular. “People like pop music, and I’m writing Celtic music. And most Chinese people don’t know what Celtic music is. It’s very Irish-Scottish. It’s so lively.” He was surprised to receive some recognition for his music after moving to the U.S. While living in Pittsburgh, he submitted one of his Celtic pieces to a local music competition and won second place. “There people don’t listen to Celtic music much. So with just like 30 or 40 endorsements or something, I was ranked second in [the competition]. I was like how can that happen, and it’s under the Celtic genre, and no one is listening to Celtic music?”

During his time at Carnegie Mellon, Jin helped create an app called Music Prodigy, which teaches people to play piano or guitar by automatically detecting the notes they play. His academic advisor there, Roger Dannenberg, was the chief scientific officer of the music education startup. “He was doing all the scientific stuff there, and he brought me in because I could help with a little bit of development. And that’s when we actually got connected. After one year we realized that my knowledge can actually improve what’s being done there, so I got more involved with the company, with the core technology.”

Four years ago, Jin came to Princeton intending to do music-related research in the Princeton Sound Lab, which conducted research in computer-generated music and is now defunct. He had originally wanted to make a program that could turn recordings of the human voice into musical instruments, especially the violin. In his office, Jin plays a recording of himself humming a simple melody that he has transformed to sound like a violin. It sounds impressively realistic. He says that is because the approach lets the emotion in the user’s voice come through in a way that no existing type of music synthesizer can emulate.

Jin says he has put this music project on the back burner to focus on VoCo for now, but it works on a very similar principle. “The method is I recorded a real violin but the violin is basically [only] playing scales.” He sings a scale to demonstrate. “That’s the training data. That’s the material we need to make a more natural violin sound for converting your voice.”
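One plausible way to use scale recordings as training material, sketched here as an assumption rather than Jin’s actual method: track the pitch of the hummed melody frame by frame, pick the recorded violin note closest to each pitch, and compute how far that note must be bent to match. Because the hummed pitch contour is followed exactly, its expressive slides and wobbles survive while the timbre comes from the violin. The G-major scale, the pitch-track input, and the function names are hypothetical.

```python
import math

# Hypothetical training set: one violin recording per note of a G-major scale,
# given as MIDI note numbers (55 = G3 ... 67 = G4).
RECORDED_NOTES = [55, 57, 59, 60, 62, 64, 66, 67]

def hz_to_midi(freq):
    """Convert a frequency in Hz to a (fractional) MIDI note number; A4 = 440 Hz = 69."""
    return 69 + 12 * math.log2(freq / 440.0)

def midi_to_hz(note):
    return 440.0 * 2 ** ((note - 69) / 12)

def map_hum_to_violin(pitch_track):
    """For each hummed pitch (Hz), return (closest recorded note, bend ratio),
    where the ratio is how much to raise (>1) or lower (<1) that violin sample."""
    plan = []
    for freq in pitch_track:
        midi = hz_to_midi(freq)
        note = min(RECORDED_NOTES, key=lambda n: abs(n - midi))
        plan.append((note, freq / midi_to_hz(note)))
    return plan

plan = map_hum_to_violin([196.0, 220.0, 250.0])  # G3, A3, and a slightly sharp B3
```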

After the Sound Lab disbanded, Jin had to find a new faculty advisor. Adam Finkelstein, a Swarthmore alumnus (Class of 1987) with a Ph.D. from the University of Washington who has been at Princeton since 1997, took Jin on. Before VoCo, Finkelstein was best known for his work with another graduate student on a project called PatchMatch, which led to what eventually became the Content-Aware Fill feature in Photoshop.

While teaching at Princeton, Finkelstein has taken several sabbaticals at Adobe, Pixar, and Google.

The main focus of Finkelstein’s research had been visual applications like video, animation, and computer graphics. He didn’t intend to change that. “How I thought it would work was that I thought I would convince Zeyu to work in video. Because in a way, audio and video are certainly related,” Finkelstein says. “There were some things I wanted to do in video editing. In fact he did start working on those things. I thought I would get him interested in working on video, but in the end what happened was Zeyu got me interested in working on audio. He sort of pulled me over to that side.”

Jin had been working with Finkelstein on some of his video-related research when they realized the need for a program like VoCo. “I’ll tell you why I’m interested in it,” Finkelstein says. It’s “because many, many times, I have made a narration for a video. I record the audio by reading a script into a microphone and then later we go and edit my recording, and I sometimes wish that I had said something a little differently, and it’s really hard to go back and re-record. So right from the very beginning I wanted this project to work because I wanted to edit my own voice in my own narrations to adjust what I was saying during the editing process.”

With existing technology “you have two choices: Live with what you recorded or go back and re-record. But when you go back and re-record, it’s very difficult to get the voice quality to match well because the microphone settings are a little bit different and the layout of the room is a little different and it just sounds different. Not only is it a pain to set up the microphone and set up the room and re-record the one sentence you wanted to change, but then you put it in there and it sounds different. It’s so much easier if I could just type the word,” and, he adds, the sound matches better.

Researchers are developing other tools to help mismatched audio sound better, but they are still not very user-friendly. By comparison, VoCo’s approach of simply typing what you want is far more convenient. “Maybe, eventually, commercial software will be able to let you do that too, but even so, it’s still a pain to go back and re-record it. So I’d rather just be able to type what I want it to say.”

The VoCo researchers are not yet at the point where they can magically reanimate the voices of the dead, though that is one of their ultimate goals. The project is part of Jin’s Ph.D. research; he expects to receive his degree next year. Jin presented the project at the ACM SIGGRAPH conference in July, and the paper has appeared in the journal ACM Transactions on Graphics. Finkelstein says that at this point he is not even sure which Adobe product it will be used in, or whether it will be used at all.

“The truth is that we’re working on being able to synthesize longer sentences, but right now we can only typically synthesize one or two words, a short phrase before it starts to sound awkward or bad. And it’s not always even successful with a short word.” The system needs at least a 20-minute recording to create audio samples of new words. In the paper, the researchers tested how often study participants could distinguish words and phrases edited by VoCo from unedited ones. They couldn’t tell the difference more than 60 percent of the time, on average.

Their next goal is to generate full sentences. “It’s a work in progress, but I’d like us to eventually be able to have the ability that you could type a full sentence and have it sound reasonably natural in somebody’s voice,” Finkelstein says. “I think a careful listener even just with their ear can hear it. We may also want to work on the project that tries to automatically detect if this operation has been done.”

Jin says they are pursuing two potential approaches to detecting whether VoCo has been used on an audio recording. The first, which they have not yet tested, is to embed an audio fingerprint in the words VoCo synthesizes: an inaudible frequency added to the sound that only computer analysis can extract. “Even if you make a filter to edit that synthesized word, it won’t remove the fingerprint,” says Jin. “So we can always recover the fingerprint and say this has been edited.” He thinks that’s the best approach; a person would have to be an expert in audio editing to figure out how to remove it.
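The fingerprint idea can be illustrated with a deliberately naive Python sketch. It assumes a 44.1 kHz sample rate and a hypothetical secret tone at 19 kHz, mixed in at about one-thousandth of full scale so that it is intended to be inaudible to most listeners, and it checks for the mark by measuring that single frequency with a one-bin discrete Fourier transform. Unlike the robust scheme Jin describes, a single steady tone like this could be stripped out with a simple notch filter; the sketch only shows the embed-then-detect principle.

```python
import math

SAMPLE_RATE = 44_100       # samples per second (assumed)
MARK_FREQ = 19_000.0       # hypothetical "secret" frequency near the top of adult hearing
MARK_AMPLITUDE = 0.001     # roughly -60 dB relative to full scale

def embed_mark(samples):
    """Add a faint sinusoid at MARK_FREQ to a synthesized word."""
    return [x + MARK_AMPLITUDE * math.sin(2 * math.pi * MARK_FREQ * n / SAMPLE_RATE)
            for n, x in enumerate(samples)]

def tone_amplitude(samples, freq):
    """Estimate the amplitude of one frequency with a single-bin DFT."""
    re = im = 0.0
    for n, x in enumerate(samples):
        angle = 2 * math.pi * freq * n / SAMPLE_RATE
        re += x * math.cos(angle)
        im -= x * math.sin(angle)
    return 2 * math.hypot(re, im) / len(samples)

def has_mark(samples, threshold=MARK_AMPLITUDE / 2):
    """Report whether the secret tone is present above half its embedded strength."""
    return tone_amplitude(samples, MARK_FREQ) > threshold

# A 0.1-second stand-in for a synthesized word: a 220 Hz tone.
word = [0.5 * math.sin(2 * math.pi * 220 * n / SAMPLE_RATE) for n in range(4410)]
marked = embed_mark(word)
```

On this 0.1-second clip, the measured amplitude at 19 kHz is about 0.001 for the marked word and essentially zero for the clean one, so `has_mark` separates them even though the waveforms look nearly identical.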

He says they could make the exact frequency and how it was programmed a secret, like a password, so people wouldn’t be able to figure it out. They haven’t actually begun to work on that part of it, though. “There is a shortcoming to that approach,” Jin says. “Because this paper is out, some people can implement VoCo and make their own version so they can just get out of any censorship. So to address that problem, our actual approach is to use deep learning to learn the pattern of the VoCo algorithm. When a VoCo-like algorithm is editing the waveform there is a trace of how it is edited inside the waveform. It’s very subtle.

“But we believe that we can actually use deep learning to find out what trace this editing process gives you. And actually in our lab experiments we’ve found that it’s pretty effective for finding VoCo-like editing. Even human editing can be detected. Like you actually re-record that voice and you manually insert that word back, we can also detect that edit as well.”

Deep learning is machine learning based on neural networks. Here’s how it works: “We create all kinds of examples of VoCo-edited words and non-edited VoCo words and we give the computer a lot of samples and teach it how to recognize whether an audio clip is edited or not — basically training it through examples,” Jin says.
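To show the train-by-example idea in miniature, here is a Python sketch that substitutes logistic regression, effectively a single artificial neuron, for the deep network Jin describes. Each clip is reduced to two hypothetical features, such as how abruptly the spectrum and the pitch jump at a suspected word boundary (scaled from 0 to 1), and the training data are made up for illustration. A real detector would more likely learn directly from audio, using far more labeled examples.

```python
import math

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(examples, labels, epochs=500, lr=0.5):
    """Stochastic gradient descent on log-loss; label 1 = edited, 0 = untouched."""
    w, b = [0.0] * len(examples[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(model, x):
    """True if the model thinks the clip was edited."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) > 0.5

# Made-up training clips: [spectral jump, pitch jump] at the suspected edit point.
examples = [[0.9, 0.8], [0.7, 0.9], [0.8, 0.6],   # edited
            [0.1, 0.2], [0.2, 0.1], [0.3, 0.2]]   # unedited
labels = [1, 1, 1, 0, 0, 0]
model = train(examples, labels)
```

A deep network replaces the two hand-picked features with many layers that discover the subtle editing "trace" on their own, but the training loop follows the same pattern: show labeled examples, measure the error, adjust the weights.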

Turning your voice into a violin is one potential side use for the VoCo technology. Finkelstein and Jin are also excited about the possibility of using VoCo to give people who can no longer speak their original voices back. People in this situation, such as physicist Stephen Hawking, typically have to use a generic artificial voice, because making a personalized one takes a very long time. When film critic Roger Ebert lost his voice to cancer, a team of Scottish audio engineers worked to create a synthesized voice that actually sounded like him. They were able to do so, however, only because they had hours upon hours of recordings from his years on television. VoCo could allow people with conditions such as ALS or cancer to use their own voices much more quickly and at lower cost.

Jin stresses that the VoCo project is still far from completion. “We’ve had a lot of very public attention recently, but I feel like there’s a lot of things to be done before we can say this is something you can use in your project. In time, we will finally make it happen, but that YouTube video last year, that was the so-called Adobe sneak, which means it’s technology that’s brewing in the lab. But it’s something still in the lab, so it’s not like a product or anything like that.” He said he wouldn’t expect it to be a product for “quite a few years.”

“We can’t comment on whether or not VoCo will be used in any Adobe product. We can only say that we hope it will,” Finkelstein says.

The presentation took place last year at the annual Adobe Max, a weeklong convention where the software company showcases its new products and features. Adobe is known for its digital editing programs, several of which are industry standard in fields like broadcasting, movie production, and web design.

The new application is called VoCo, and it promises to revolutionize audio production the way that Adobe’s iconic PhotoShop program did with photography. The user can type words that were not said in an original recording, and the program will generate a new recording of those words in the voice of the original speaker. With VoCo software you can make natural-sounding audio samples of people saying words and phrases that they never actually said.

Back to the 2016 video: The crowd laughs and cheers as Jin plays a recording of comedian Michael Key from the Comedy Central show, “Key and Peele.” Jin and the hosts cheerfully exchange jokes as he demonstrates how he can quickly change the recording to make Key say that he “kissed Jordan three times,” when the comedian has never actually spoken those words. At the end of the demonstration, Peele jokingly says to Jin, “you could get in big trouble for something like this!”

Surely, the ability to change audio recordings in this way brings up many questions. Jin and his faculty advisor, Princeton professor of computer science Adam Finkelstein, are concerned about this issue and say they are working on several different ways to detect whether their program has been used on a recording. “When we show people this project, many people immediately have that reaction, like ‘oh no, you could make somebody say something that they never said,’ and so we have to have this conversation,” Finkelstein says. “It’s not too shocking or surprising.”

Finkelstein says it was the same way when photo editing programs like Adobe’s PhotoShop came out. “People want to know, has this photo been edited, right? When PhotoShop came out all of a sudden it was possible to do all kinds of crazy things.” He refers to the public outcry over the February, 1982, cover of National Geographic as an example. The magazine had used digital editing to move two of the Egyptian pyramids slightly closer together in order to fit on the cover. “People have been asking these questions since the beginning of digital editing,” he says.

Speech synthesis and the ability to edit recordings are not new. It has been technologically possible to change recordings and even manufacture new words since the beginning of analog tape recording. Skilled radio and music producers would physically cut and reattach pieces of audio tape to rearrange or delete unwanted words or sounds in the recording. It was a laborious process, but it worked well enough.

The advent of digital technology, starting in the 1980s, changed everything. Broadcasters and amateurs alike now routinely use editing programs such as Adobe Audition, a standard in the industry, to easily fix recording mistakes, remove unwanted background sounds, and otherwise modify recordings. This ushered in a creative boon for amateur artists. Many things that were once only possible to do in a studio are now done quickly and cheaply by high school kids in their bedrooms.

Since the advent of YouTube 10 years ago, homemade movies and music have become mainstream. A notable example is the Japanese music composition program called Vocaloid, which shares some similarities to the VoCo project. The program allows users to make a series of stock synthesized voices sing whatever words and melodies the user wants them to. Each synthesized voice used in the program is represented as a different anime cartoon character. The artificial voices are manufactured by using sample recordings of real Japanese singers. In the past decade, Vocaloid music has become its own distinct genre. Composers typically make songs about creepy horror-related subject matter. The voices tend to sound child-like and robotic.

Addressing the difference between Vocaloid and VoCo, Jin says it’s easier to edit a singing voice than it is to edit speech. “Vocaloid synthesizes a pretty robotic voice, if you listen to them. But people like it because it sounds a little bit less human and very interesting. But they can do a lot of stuff like singing all kinds of styles and syllables. For speech, it’s actually way harder to make it more human-like. When you are singing the pitch is pretty stable per note. And the stable pitch is very easy to synthesize with a computer. The computer can just make a flat pitch or a pitch with a little bit of fluctuation to make it a little bit more human like. But for speech, the pitch is everywhere. We’re still doing the research on how people choose pitch in saying things. This is still a pretty open question.”

Currently if you want to fix a mistake or add new words to a recording you have two options: either invite the speaker or voiceover talent to redo the recording or manually fix the mistake yourself, either of which can be time consuming and cost prohibitive. If you want to change a recording to perhaps create a new word, you would have to hunt through the recording to find all the bits and pieces of sounds you need to create the new word and cut and paste them together. This can also be a very time consuming and costly process.

In order for it to work, all the sounds you need have to be present in the original recording. Jin says it would take around 30 hours of recordings for companies like Google and Apple to make their synthesized voices. Says Jin: “The problem [with those programs] is they only support one specific voice. You can’t make a Siri of your own voice.”

VoCo makes this process much faster and cheaper, and it can recreate any voice. The VoCo program only needs only a 20 to 40-minute audio sample. This is because VoCo works differently from other voice synthesis programs. The user first provides a written transcript of the recording. Unlike other programs, VoCo starts by generating a generic synthetic voice to match the written transcript. The program breaks down the original voice recording into small sound snippets called phonemes, which are the building blocks of words. The artificially generated voice is then used as guide to find the correct phonemes and build them into words. You type the words you want to change, and the program does so automatically. It’s similar to the find and replace feature in Microsoft Word.

Says Finkelstein: “It’s true that we’re making it easier to do this thing that could cause people to believe that somebody said something that they didn’t say. It is true that we’re moving toward making it easier to do that, and it’ll take time for people to adjust to that idea, just as it took time for people to adjust to the idea that you could move a pyramid in PhotoShop” — something unremarkable today.

Originally from Anhui, China, Jin, 28, has been in the United States for the past six years. He spent the first two years in Pittsburgh, earning his master’s degree in machine learning at Carnegie Mellon University. He is now a fourth year graduate student, working toward a Ph.D in computer science at Princeton University. Several music keyboards once used by the Princeton Laptop Orchestra are scattered on the desk in his office. Music and composition, he says, are among his many eclectic interests that led to his career in computer science and the VoCo project.

Jin, whose mother is a government worker and whose father works for Chery Motors, a Chinese car company in which he played a founding role, started playing with computers at the age of three and started the piano at four. He also plays the flute. He learned computer programming in elementary school. Jin says he didn’t really like playing the piano, at first, though. He likes music, but he found the traditional process of learning to play an instrument to be boring and repetitive. He wanted to find a way to use computers to make it easier. That’s what led him to this work. Jin cites his uncle with a Ph.D in computer science as a childhood influence.

At the age of 17 Jin released several albums of Celtic music in China. His passion for the music started when he got into the Norwegian music group Secret Garden as a kid, after the group had won the Eurovision song contest. “It’s very funny I listened to Secret Garden. That’s pretty classic Norwegian music and I was like ‘holy crap this is great music.’ So I kept listening to more and more and then I was thinking OK I’m going to write some music and that’s when I got started. So I was highly influenced by European music when I was in China.”

His albums weren’t especially popular. “People like pop music, and I’m writing Celtic music. And most Chinese people don’t know what Celtic music is. It’s very Irish-Scottish. It’s so lively.” He was surprised to receive some recognition for his music after moving to the U.S. He submitted one of his Celtic pieces to a music competition in Pittsburgh when he was living there and won second place. “There people don’t listen to Celtic music much. So with just like 30 or 40 endorsements or something, I was ranked second in [the competition]. I was like how can that happen, and it’s under the Celtic genre, and no one is listening to Celtic music?”

During his time at Carnegie Mellon Jin helped create an app called Music Prodigy. The app teaches people to play piano or guitar by automatically detecting the notes they play. His academic advisor there, Roger Denenburg, was the chief scientific officer of the music education startup. “He was doing all the scientific stuff there, and he brought me in because I could help with a little bit of development. And that’s when we actually got connected. After one year we realized that my knowledge can actually improve what’s being done there, so I got more involved with the company, with the core technology.”

Four years ago Jin came to Princeton intending to do music-related research in the Princeton Sound Lab (now defunct, the lab had conducted research in computer-generated music). He had originally wanted to make a program that could turn recordings of the human voice into musical instruments, especially the violin. In his office, Jin plays a recording of himself humming a simple melody. He had transformed it to sound like a violin. It sounded impressively realistic. He said the reason for that is because it allows the emotion in the user’s voice to come through in a way that can’t be emulated with any existing type of music synthesizer.

Jin says he has put this music project on the back burner to focus on VoCo for now, but it works on a very similar principle. “The method is I recorded a real violin but the violin is basically [only] playing scales.” He sings a scale to demonstrate. “That’s the training data. That’s the material we need to make a more natural violin sound for converting your voice.”

After the Sound Lab disbanded Jin had to find a new faculty advisor. Adam Finkelstein, a Swarthmore alumnus, Class of 1987, and a University of Washington Ph.D. who has been at Princeton since 1997, decided to take Jin on board. Before VoCo, Finkelstein was mostly known for his work with another graduate student on a project called Patch Match. That work led to what eventually became the content aware fill feature in PhotoShop.

While teaching at Princeton, Finkelstein has taken several sabbaticals at Adobe, Pixar, and Google.

The main focus of Finkelstein’s research had been visual applications like video, animation, and computer graphics. He didn’t intend to change that. “How I thought it would work was that I thought I would convince Zeyu to work in video. Because in a way, audio and video are certainly related,” Finkelstein says. “There were some things I wanted to do in video editing. In fact he did start working on those things. I thought I would get him interested in working on video, but in the end what happened was Zeyu got me interested in working on audio. He sort of pulled me over to that side.”

Jin had been working with Finkelstein on some of his video-related research when they realized the need for a program like VoCo. “I’ll tell you why I’m interested in it,” Finkelstein says. It’s “because many, many times, I have made a narration for a video. I record the audio by reading a script into a microphone and then later we go and edit my recording, and I sometimes wish that I had said something a little differently, and it’s really hard to go back and re-record. So right from the very beginning I wanted this project to work because I wanted to edit my own voice in my own narrations to adjust what I was saying during the editing process.”

With existing technology “you have two choices: Live with what you recorded or go back and re-record. But when you go back and re-record, it’s very difficult to get the voice quality to match well because the microphone settings are a little bit different and the layout of the room is a little different and it just sounds different. Not only is it a pain to set up the microphone and set up the room and re-record the one sentence you wanted to change, but then you put it in there and it sounds different. It’s so much easier if I could just type the word,” and, he adds, the sound matches better.

Other researchers are developing features to help mismatched audio sound more consistent, but those tools are still not very user-friendly. VoCo's approach of simply typing what you want is far more convenient. "Maybe, eventually, commercial software will be able to let you do that too, but even so, it's still a pain to go back and re-record it. So I'd rather just be able to type what I want it to say," Finkelstein says.

The VoCo researchers are not quite at the point where they can magically reanimate the voices of the dead, though that is one of their ultimate goals. The project is part of Jin's Ph.D. research, and he expects to receive his degree next year. Jin presented the project at the ACM SIGGRAPH conference in July, and his paper has appeared in the journal ACM Transactions on Graphics. Finkelstein says that at this point he is not even sure which Adobe product it will be used in, or whether it will be used at all.

"The truth is that we're working on being able to synthesize longer sentences, but right now we can only typically synthesize one or two words, a short phrase before it starts to sound awkward or bad. And it's not always even successful with a short word," Finkelstein says. The software also needs at least 20 minutes of recorded speech from a speaker before it can generate new words in that person's voice. In the paper, the researchers asked study participants to distinguish words and phrases edited by VoCo from unedited ones. On average, participants failed to spot the edits more than 60 percent of the time.

Their next goal is to generate full sentences. “It’s a work in progress, but I’d like us to eventually be able to have the ability that you could type a full sentence and have it sound reasonably natural in somebody’s voice,” Finkelstein says. “I think a careful listener even just with their ear can hear it. We may also want to work on the project that tries to automatically detect if this operation has been done.”

Jin says they are working on two potential approaches to detect whether VoCo has been used on an audio recording. The first, which they have not yet tested, is to embed an audio fingerprint into the words that VoCo synthesizes. An inaudible frequency would be embedded in the sound, and it could be extracted only by computer analysis. "Even if you make a filter to edit that synthesized word, it won't remove the fingerprint," says Jin. "So we can always recover the fingerprint and say this has been edited." He thinks that is the best approach. A person would have to be an expert in audio editing to figure out how to remove it.

He says the exact frequency, and the way it is embedded, could be kept secret, like a password, so that people could not figure it out. They haven't actually begun work on that part, though. "There is a shortcoming to that approach," Jin says. "Because this paper is out, some people can implement VoCo and make their own version so they can just get out of any censorship. So to address that problem, our actual approach is to use deep learning to learn the pattern of the VoCo algorithm. When a VoCo-like algorithm is editing the waveform there is a trace of how it is edited inside the waveform. It's very subtle.

“But we believe that we can actually use deep learning to find out what trace this editing process gives you. And actually in our lab experiments we’ve found that it’s pretty effective for finding VoCo-like editing. Even human editing can be detected. Like you actually re-record that voice and you manually insert that word back, we can also detect that edit as well.”

Deep learning is a form of machine learning based on multilayered neural networks. Here's how Jin's detector works: "We create all kinds of examples of VoCo-edited words and non-edited VoCo words and we give the computer a lot of samples and teach it how to recognize whether an audio clip is edited or not, basically training it through examples," Jin says.

The ability to turn your voice into a violin is one potential side application of the VoCo technology. Finkelstein and Jin are also excited about the possibility of using VoCo to restore the original voices of people who can no longer speak. People in this situation, such as physicist Stephen Hawking, have typically had to use a generic artificial voice, because creating one that sounds like the person takes a very long time. When film critic Roger Ebert lost his voice to cancer, a team of Scottish audio engineers worked to create a synthesized voice that actually sounded like him. They were able to do so only because they had hours upon hours of recordings from his years on television. VoCo could allow people with conditions such as ALS or cancer to use their own voices much more quickly and at a lower cost.

Jin stresses that the VoCo project is still far from completion. "We've had a lot of very public attention recently, but I feel like there's a lot of things to be done before we can say this is something you can use in your project. In time, we will finally make it happen, but that YouTube video last year, that was the so-called Adobe sneak, which means it's technology that's brewing in the lab. But it's something still in the lab, so it's not like a product or anything like that." He says he would not expect it to become a product for "quite a few years."

“We can’t comment on whether or not VoCo will be used in any Adobe product. We can only say that we hope it will,” Finkelstein says.